Nottingham Open Data 6

|

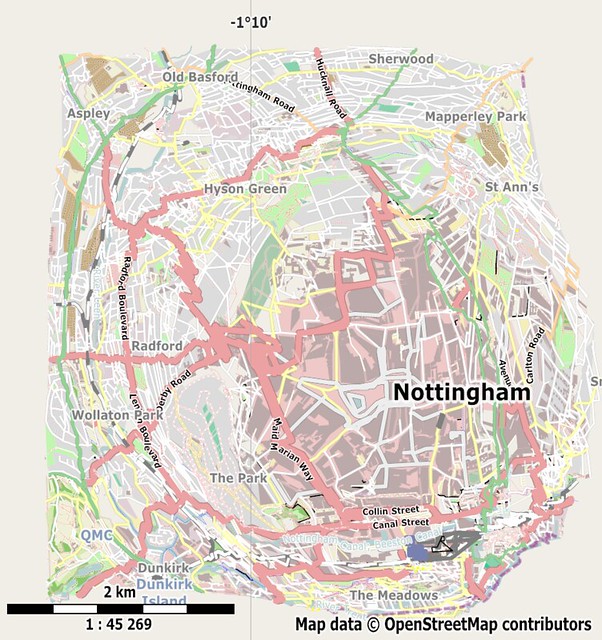

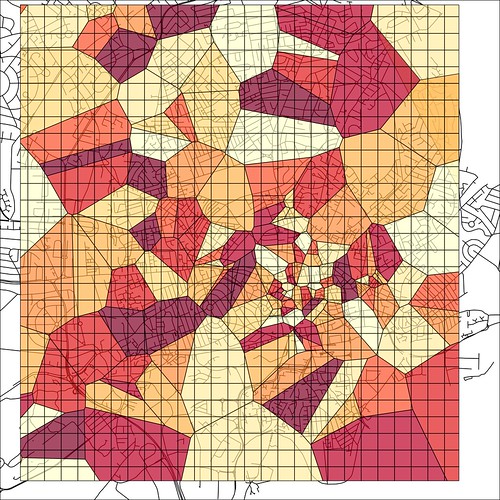

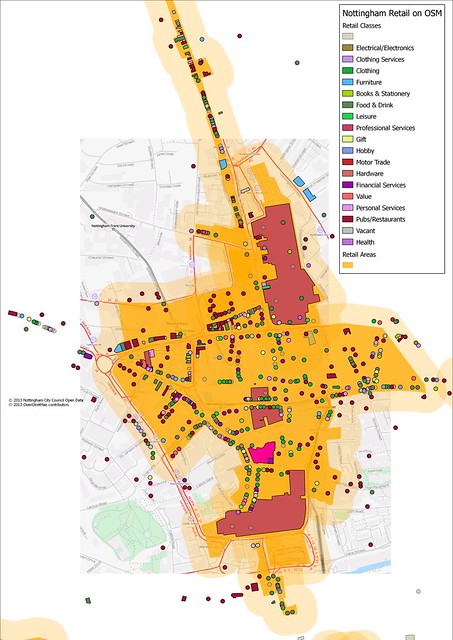

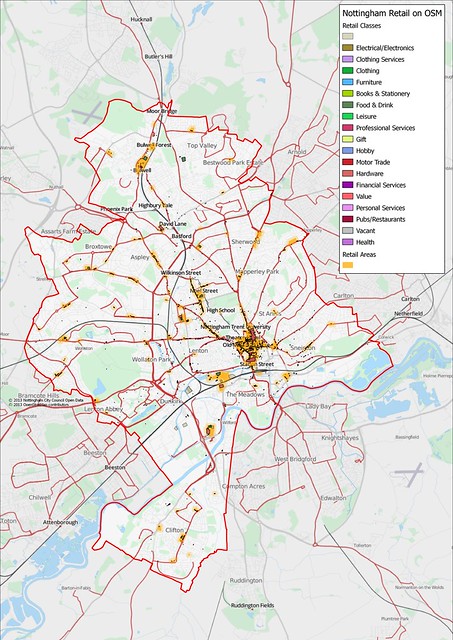

| Nottingham City Centre retail areas with mapped retail units (shops, banks, pubs, restaurants, cafes, fast food outlets etc.; the large areas are shop=mall (an unsatisfactory tag)). |

|

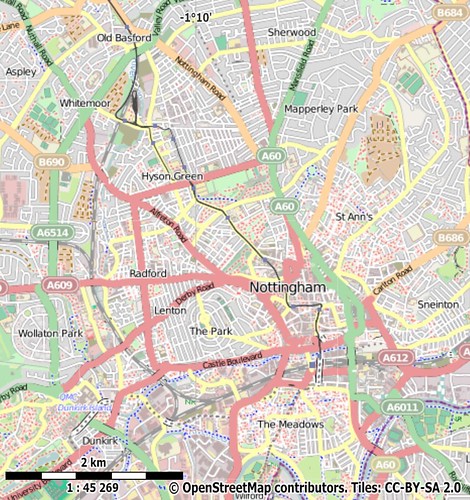

| Retail landuse and retail outlets in the city of Nottingham (a buffer is used to accentuate smaller retail outlets) |

This morning I achieved one of my targets for using Nottingham Open Data. This was 90% reconciliation of the Licensed Premises dataset (this compares with around 40% when I first blogged about it).

|

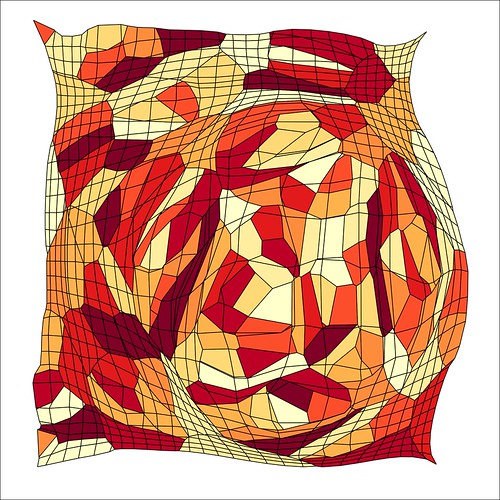

| Tabulation of reconciliation of Nottingham Open Data Licensed Premises File vs OSM (loaded as an image because turning my Excel stuff into an HTML table is a PITA). |

|

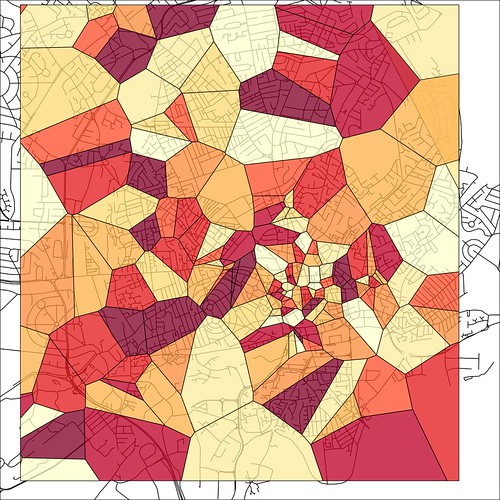

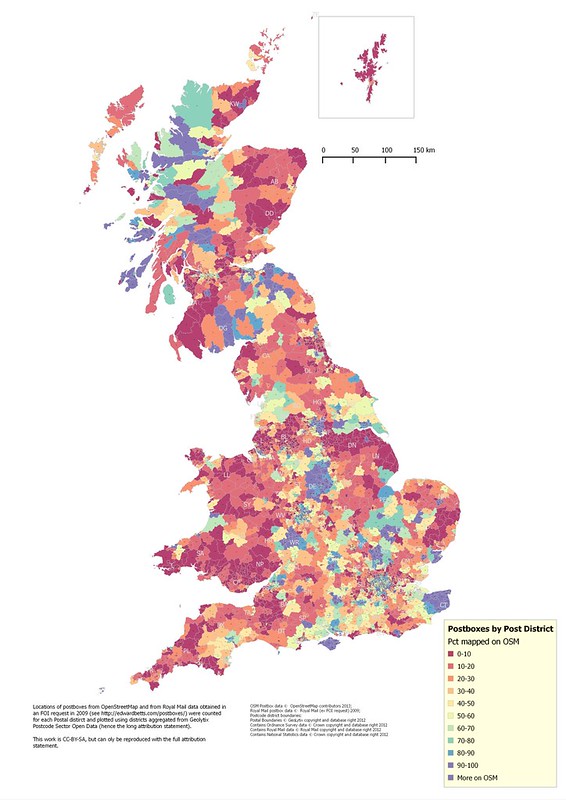

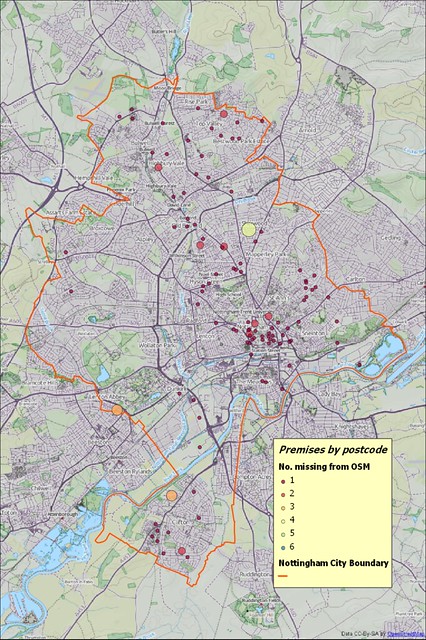

| Licensed premises from Nottingham Open Data not reconciled to OpenStreetMap (plotted as number of premises at postcode centroid) cf. with original map. |
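The figures behind a tabulation and map like these can be sketched in a few lines. This is only an illustration, not the workflow actually used for this post: the record layout (`id`, `postcode`), the sample postcodes and the function name `reconciliation_summary` are all invented for the example, and it assumes the set of premises already matched to OSM has been worked out by some other means.

```python
from collections import Counter


def reconciliation_summary(premises, matched_ids):
    """Return (share of premises reconciled, unreconciled count per postcode).

    `premises` is a list of dicts with hypothetical keys "id" and "postcode";
    `matched_ids` is the set of ids already matched to an OSM object.
    """
    unmatched = [p for p in premises if p["id"] not in matched_ids]
    rate = 1 - len(unmatched) / len(premises) if premises else 0.0
    # One count per postcode: this is what would be plotted at the centroid.
    per_postcode = Counter(p["postcode"] for p in unmatched)
    return rate, per_postcode


# Invented sample records, purely for illustration.
sample = [
    {"id": "LP1", "postcode": "NG1 1AA"},
    {"id": "LP2", "postcode": "NG1 5ZZ"},
    {"id": "LP3", "postcode": "NG1 1AA"},
    {"id": "LP4", "postcode": "NG1 5ZZ"},
]
rate, per_postcode = reconciliation_summary(sample, {"LP1", "LP3"})
print(f"{rate:.0%} reconciled")
for postcode, count in per_postcode.most_common():
    print(postcode, count)
```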

Most mapping sessions have lasted just over an hour, mainly involved photo mapping, and seem to generate a huge amount of data. With a small number of exceptions in the City Centre I haven't done repeat surveys. Apart from trying to take lots of photos, I have not tried to map everything I came across, which has been my usual approach in the past. I started out mapping everything on my first outing, but it took 30 minutes before I mapped my first shop, leaving only 10 minutes for other shops, so doing it my old way was going to take forever. So I stopped worrying about grabbing everything and just tried to get shops, but did collect other information if it was convenient and readily accessible.

I distinguish between these two styles of mapping, by analogy with farming, as intensive and extensive. In one we put all our efforts into maximising yield (of crop, or OSM data) from a small number of hectares; in the other we are happy if the yield is good enough.

What I've done.

- Added postcodes to as many as 5,000 objects. (It's a little difficult to check as I have touched objects which already had postcodes.)

- Added around 1,200 different postcodes, about 20% of the city. (Again some may have already been present.)

- Added around 5,800 housenumbers. These are not just retail premises, but houses close to shopping areas, and when I've walked along streets I've tried to add house numbers at intersecting streets.

- Added over 2,500 buildings.

- Taken 7,000 photos, of which around 6,000 are now available on OpenStreetView.

- Recorded about 13 hours of mapping audio.

- Loaded around 200 kilometres of GPX tracks.

What I still have to do.

- Finish adding POIs for shops (particularly in the City Centre).

- Indoor mapping in the two main Shopping Centres (Malls) in the middle of the city.

- Finish adding building outlines in the retail landuse polygons (I'm tending to do smaller ones first)

Things I've learnt (and why)

- Map all shops in a group together. If a single shop changes use or closes and the row of shops has been mapped it is often impossible to reconcile which shop has been affected. It's far better to be systematic for a small area than mapping patchily. Exceptions can be made for very recognisable buildings or POIs. (This also helps check that POIs are in the correct location, see below).

- Open Data Addresses are great. The Open Data is not accurately geo-located (only to postcode), but it does contain the address. This meant that as long as I could locate the business on Bing aerials I did not need to collect detailed address data. This made surveying less arduous.

- Good high-quality building outlines help. A single building outline covering a whole block is useless. A lot of Nottingham City Centre had building polygons mapped not from aerial photography but from OSGB StreetView. Firstly the building outlines were not very accurate. Secondly, it is very time consuming to divide and correct such amorphous polygons.

- Good photos and decent aerial photos are critical. I have taken a huge number of photos (all available on OpenStreetView) to assist this mapping. I try to get photos of the roof line as I can correlate chimneys, dormer windows and other roof-line features between the aerial photos and my street level ones. It is amazingly easy to displace a POI a few tens of metres even with all this information.

- Android Apps aren't much use in a City Centre. I made some use of KeyPadMapper3, but found the data often needed to be tweaked because my Android phone's GPS location wasn't too good. In the City Centre the canyon effect is too much even with a Garmin. A further reason not to use the phone is that I was already using a camera, two GPS units (one in the backpack) and a digital voice recorder; juggling these and the phone was too much. The phone did come into its own when the batteries ran out on the dictaphone. In the end I used the voice recorder for most addresses I collected. I didn't try Vespucci.

- History of POIs is enormously helpful. Most of the errors in the Open Data are failures to update historical data (POIs closing, changing ownership, re-branding, or moving elsewhere). In many cases Nottingham mappers have kept the historical information when updating POIs, and this means that it's much easier to reconcile OSM with the Local Open Data.

- It's really difficult to tell if some POIs are still open. See the associated post on Vanishing Pubs.

- Night-time surveying is the only way to check the status of some Bars, Nightclubs and Fast Food outlets. I'm too old to be a night owl, so someone else needs to do this.

- POIs change fast. (Well, I already knew this.) My re-survey of Market Street, Mansfield Road and Upper Parliament Street/Forman Street, all of which were surveyed 2 years ago by Paul Williams, enables the rate of change to be quantified.

- A 5% error rate in local government open data seems a reasonable assumption. This is not too different from rates found with NaPTAN and Ordnance Survey Open Data Locator. It does mean that it's far better to use this data as the basis for survey (as we have done with Locator) rather than import (as was done with NaPTAN).

- Local Government Open Data needs significant interpretation. It is collected for discrete purposes, and there is no integration across data sets. I presume licences are granted for a number of years. Therefore there are no checks as to whether the licence is still in use, or even has ever been used, until renewal time.

- Extensive surveying is more fun, and less exhausting, than intensive surveying. By an intensive survey I mean one intended to collect all types of mapping data in a discrete area. Extensive surveying involves covering a larger area perhaps with some specific targets, but most information is collected as a side product rather than with deliberation.

- It was a good mapping project. A targeted set of POIs makes for a reasonable mapping project over a shortish term.

- More Systematic Coverage. Extensive surveying means more systematic coverage of the city: even if not in great detail.

What to do next

The next steps are fairly obvious.

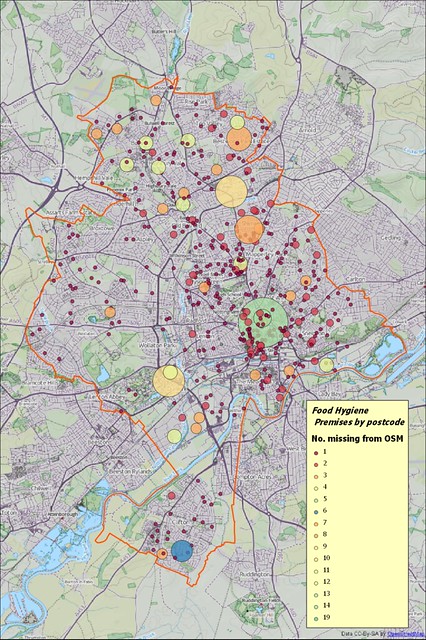

Repeat for Food Hygiene Data. I have an additional data source from the City Council which covers POIs which serve food (anything from fast food outlets to schools and hospitals). This is about twice the size of the Licensed Premises file (2400+ cf. 1200 POIs) and at the moment I have only reconciled 70% of the data. In the main this means checking more day nurseries, care homes and similar establishments.

Change Detection. Build a mechanism for automatically detecting change in the source data. So far I have just used a snapshot of the data, but it would be very useful to find changes in the source data files and use them to drive surveys.
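A minimal sketch of what such a mechanism might look like, assuming each snapshot is a CSV file with a stable identifier column. The column names (`id`, `name`, `postcode`) and the sample rows are invented for the example; the real Nottingham files may be laid out quite differently, and may not carry a stable identifier at all.

```python
import csv
import io


def load_snapshot(csv_text, key="id"):
    """Index the rows of one CSV snapshot by a (hypothetical) identifier column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row[key]: row for row in reader}


def diff_snapshots(old, new):
    """Compare two indexed snapshots and list added, removed and changed ids."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"added": added, "removed": removed, "changed": changed}


# Invented sample snapshots, purely for illustration.
OLD = """id,name,postcode
1,The Old Pub,NG1 1AA
2,Corner Cafe,NG1 2BB
3,Late Bar,NG1 3CC
"""
NEW = """id,name,postcode
1,The Old Pub,NG1 1AA
2,Corner Bistro,NG1 2BB
4,New Takeaway,NG1 4DD
"""

changes = diff_snapshots(load_snapshot(OLD), load_snapshot(NEW))
for kind, ids in changes.items():
    print(kind, ids)
```

The ids in each list could then be turned into a survey list, with postcodes pointing to the streets worth revisiting.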

Create additional tools for Food Hygiene data. The Food Hygiene data is actually available for many parts of the UK and is Open Data. There are at least 350,000 POIs available. It is usually safe to assume that it is accurate at the postcode level, but in the nature of retail outlets several are usually present in each postcode. It would be nice to be able to create layers for mapping (e.g., in JOSM, Potlatch etc.) which spread the FHRS POIs out around their postcode location, preferably ordered by housenumber in the right direction. It would also be good to be able to load subsets of this data as POIs or similar into Garmin or Android devices.
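One way the spreading-out might work is to lay the POIs of a postcode along a straight line through the centroid, sorted by the leading digits of the housenumber. The sketch below does only that, under simplifying assumptions: the function names, the dict keys (`name`, `housenumber`), the 10 m spacing and the sample coordinates are all invented here, the street bearing has to be supplied by the caller, and the metres-to-degrees conversion is a rough spherical approximation.

```python
import math
import re


def house_number_key(housenumber):
    """Sort key: leading digits of a housenumber ("2a" -> 2); unnumbered last."""
    match = re.match(r"\d+", housenumber or "")
    return int(match.group()) if match else float("inf")


def spread_pois(pois, centroid, bearing_deg=90.0, spacing_m=10.0):
    """Place POIs along a line through `centroid` (lat, lon), in housenumber order.

    `bearing_deg` is the assumed direction in which housenumbers increase
    (0 = north, 90 = east); `spacing_m` is the gap between neighbouring POIs.
    """
    lat0, lon0 = centroid
    ordered = sorted(pois, key=lambda p: house_number_key(p.get("housenumber")))
    # Approximate metres per degree near the centroid.
    m_per_deg_lat = 111_320.0
    m_per_deg_lon = 111_320.0 * math.cos(math.radians(lat0))
    theta = math.radians(bearing_deg)
    placed = []
    for i, poi in enumerate(ordered):
        # Centre the row of POIs on the postcode centroid.
        offset_m = (i - (len(ordered) - 1) / 2) * spacing_m
        dlat = offset_m * math.cos(theta) / m_per_deg_lat
        dlon = offset_m * math.sin(theta) / m_per_deg_lon
        placed.append({**poi, "lat": lat0 + dlat, "lon": lon0 + dlon})
    return placed


# Invented sample data, purely for illustration.
pois = [
    {"name": "C", "housenumber": "12"},
    {"name": "A", "housenumber": "2a"},
    {"name": "B", "housenumber": "7"},
]
for p in spread_pois(pois, (52.9548, -1.1581)):
    print(p["name"], p["housenumber"], round(p["lat"], 6), round(p["lon"], 6))
```

Getting the "right direction" is the hard part: the bearing would have to come from the street geometry or from housenumbers already mapped nearby, neither of which this sketch attempts.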

Developing sensible categories for retail. In some of the images in this blog post I have used an ad hoc categorisation of available amenity=* and shop=* values. It would be useful to develop a more considered version of these categories.
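As a starting point, a categorisation of this kind can be kept as a simple lookup from tag to category. The tag values below are ordinary OSM ones, but the category names and groupings are invented for this example; they are not the ad hoc categories used for the images in this post.

```python
# Hypothetical grouping of amenity=* and shop=* values into broad categories.
RETAIL_CATEGORIES = {
    ("amenity", "pub"): "drinking",
    ("amenity", "bar"): "drinking",
    ("amenity", "nightclub"): "drinking",
    ("amenity", "restaurant"): "eating",
    ("amenity", "cafe"): "eating",
    ("amenity", "fast_food"): "eating",
    ("amenity", "bank"): "financial",
    ("shop", "supermarket"): "food retail",
    ("shop", "convenience"): "food retail",
    ("shop", "clothes"): "comparison retail",
}


def categorise(tags):
    """Return a broad category for an OSM tag dict, or None if not retail."""
    for key in ("amenity", "shop"):
        category = RETAIL_CATEGORIES.get((key, tags.get(key)))
        if category is not None:
            return category
    # Fall back to a catch-all so unlisted values are still counted.
    if "shop" in tags:
        return "other shop"
    if "amenity" in tags:
        return "other amenity"
    return None


print(categorise({"amenity": "pub", "name": "Example Arms"}))
print(categorise({"shop": "florist"}))
```

Keeping the table as plain data makes it easy to revise the groupings as a more considered scheme develops.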

Premises from Nottingham Open Data Food Hygiene file not reconciled with OpenStreetMap (cf. with image above).

Conclusion

The most important thing is that this project would never have started without the availability of Local Government Data. Although I could have tried to find and map retail outlets I would have missed many isolated ones, and would have had no idea how many more there were to find. With retail data mapped systematically it becomes possible to evaluate exactly how we use tags and if there are any obvious improvements. Remember that Nottingham is the 8th largest retail centre in the United Kingdom and is therefore a reasonable exemplar for all but the largest retail centres in Europe and North America.

A consequence of trying to be systematic is that I have visited areas of the city which have had very little on-the-ground mapping. I have been able to collect other POIs, addresses, correct road alignments etc.

Lastly, this is a very productive and rewarding means of mapping. If you have any local open data on shops I recommend a bit of Retail Therapy.